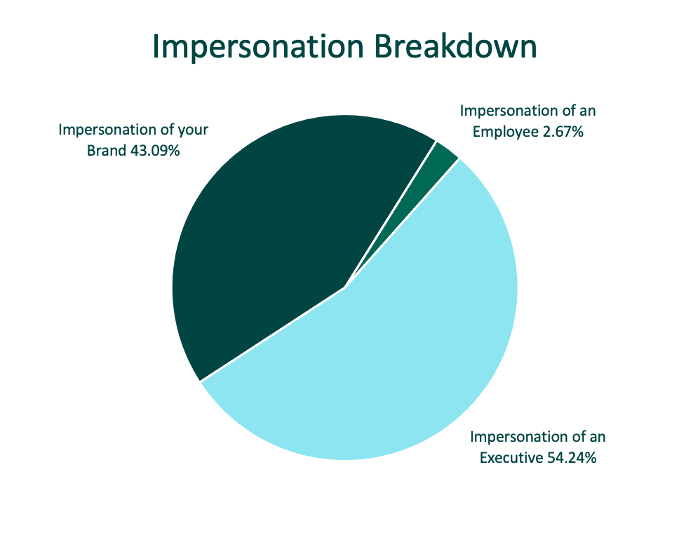

Executive impersonation on social media is at an all-time high as threat actors take advantage of AI to improve and scale their attacks. In Q3, accounts impersonating high-ranking executives climbed to more than 54% of total social media impersonation volume, surpassing brand attacks for the first time since Fortra began tracking this data. The volume and composition of these attacks strongly indicate that they are crafted using generative AI.

AI and Social Media

In the second half of 2023, impersonation attacks as a whole grew to become the number one threat type on social media. These threats manifest as fraudulent stores, brand pages, and executive profiles, with fake executive profiles accounting for the majority of the volume (see graph above). Fake accounts are easily created with AI, and some tools can generate the entire lifecycle of a scam.

Social impersonation tactics are evolving fast, and they’re more convincing than ever. Executives are prime targets, especially as consumers increasingly rely on social platforms to research companies and interact with the individuals who represent a brand. These high-visibility profiles make attractive entry points for attackers aiming to exploit trust and credibility.

AI is adding another layer of risk. While advanced AI isn’t always financially feasible for scammers to deploy end-to-end, widely available tools make it easy to automate activity at scale. This leaves bad actors free to focus on creating accounts and conducting one-to-one interactions, amplifying the reach and credibility of their campaigns.

By automating these attacks, criminals can carry out fraud more efficiently and at a greater scale than manual methods would allow.

Executive Impersonation on Social Media

Threat actors exploit the trust people place in high-profile figures, using AI to generate realistic images or videos that promote offers, giveaways, or other enticements. These posts often include instructions to click a link or engage directly with the fake executive via direct message, manipulating victims into taking actions that compromise their security.

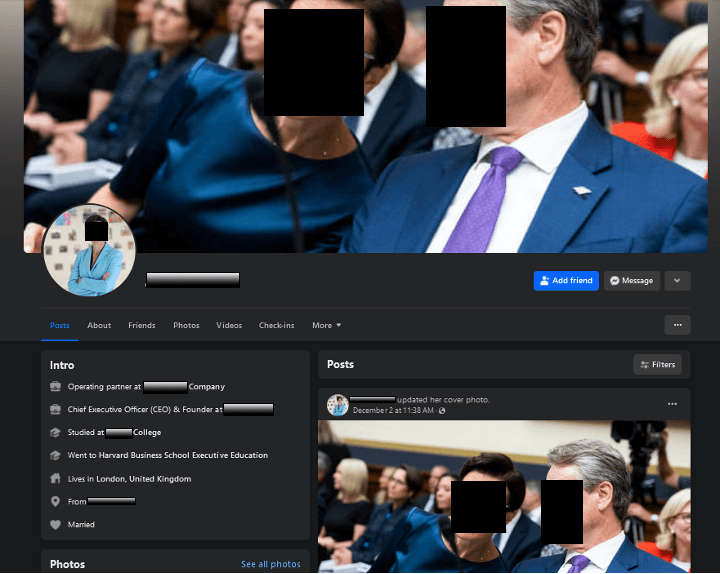

Following is an example of a fake executive account impersonating the CEO of a global financial institution. The profile contains a professional photo and “about” information that is a near-exact description of the executive.

AI’s ability to convincingly mimic imagery and speech has made malicious pages and posts nearly indistinguishable from legitimate executive content. As a result, brands are often left scrambling to contain the damage, especially on fast-moving social platforms where broad user reach amplifies the impact of such attacks.

Posts are the most critical component of an executive impersonation attack. If the actor’s ultimate goal is to communicate with the victim, comments on a post will be answered either by AI or by the actor directly. Each reply further legitimizes the account, and the user is then encouraged to continue the conversation through direct messages.

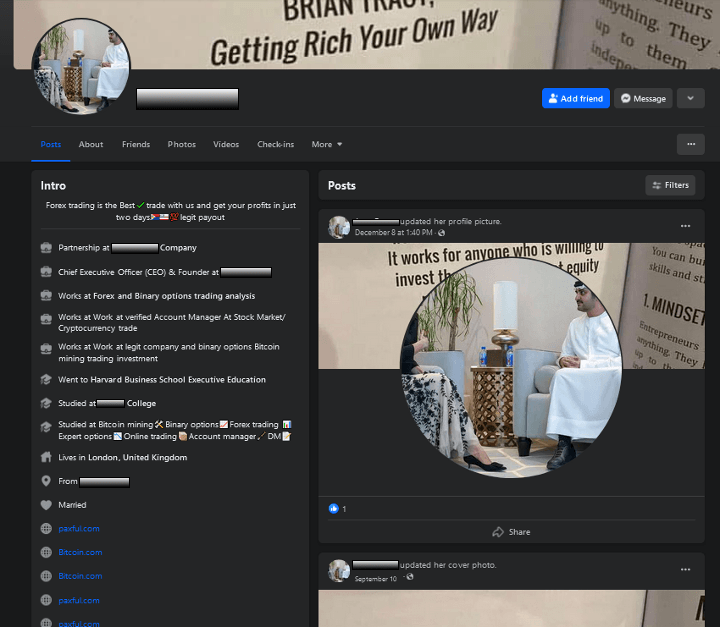

Following is a fraudulent executive profile impersonating the CEO of a global financial institution. It includes links to Bitcoin-related sites, aiming to lure users into visiting them on the strength of the fake executive’s false endorsement.

Social scams operate within a narrow window of opportunity. Once a post or direct message is flagged as suspicious, the credibility of the entire account can quickly unravel. To maximize their impact, threat actors design content that appears legitimate and endorsed by others.

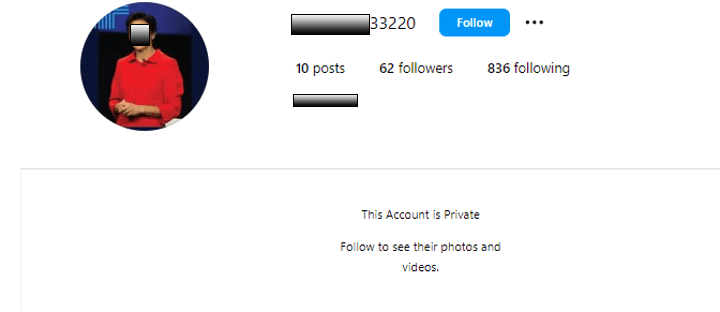

They often begin with private accounts, allowing them to “age” without attracting attention. AI-generated fake profiles are then deployed to interact with the original content, creating the illusion of engagement and validation. By mimicking real individuals, these coordinated networks can deceive both users and automated security systems, amplifying the scam’s perceived authenticity.

Executive impersonation by industry

Executive impersonation pages look different depending on the industry targeted. For instance, attacks on financial institutions focus on stealing credit card information and account data, or on taking money directly from victims’ accounts. Retail is a prime target for counterfeit scams, as threat actors recognize that consumers increasingly research brands and product lines through the lens of influencers on social platforms. Other commonly targeted industries include cryptocurrency, computer software, and e-commerce.

Situational risks for executive impersonation

Security teams need to track whether executives maintain an active social presence while simultaneously monitoring for impersonation attempts. Certain scenarios create opportunities for bad actors, often without the executive or brand realizing it:

Former executives with active old accounts: Profiles tied to previous roles can still display outdated logos and brand information, lending credibility to imposters who exploit the connection.

Executives without active profiles: When no legitimate profile exists, consumers have no reliable source for information, leaving a vacuum that impersonators can easily fill.

Proactive management of executives’ social presence, both current and former, can reduce confusion and limit opportunities for impersonation.

Identifying AI-Generated Content

AI-generated impersonations are increasingly difficult to detect, as AI can replicate the subtle nuances of human behavior with striking accuracy. While detection tools exist, they remain limited, especially for deepfake videos, and social platforms rarely catch these threats in time. For now, identifying such scams still depends heavily on human expertise, particularly from analysts who understand both the intricacies of AI and the complexities of human communication.

AI identifiers include:

1. Account URLs containing misspelled or manipulated versions of an executive’s name, such as:

- https://www.instagram.com/xxx_efra137/

- https://www.instagram.com/xxx_efra485/

- https://www.instagram.com/xxxx_fasrer_7878/

- https://www.instagram.com/xxxx_fr14/

- https://www.instagram.com/xxxx_fra116/

- https://www.instagram.com/xxxx_fra16/

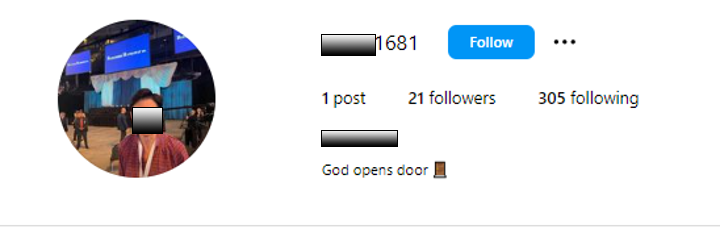

2. Multiple accounts using the same photo of an executive:

3. A canned phrase in the profile of the account repeated in other accounts:
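Parts of this triage can be scripted before an analyst reviews the results. The Python sketch below is purely illustrative and is not a Fortra tool: it uses fuzzy string matching from the standard library’s `difflib` to flag handles that resemble an executive’s name, groups accounts that share an identical (case- and whitespace-normalized) bio, and fingerprints profile photos with SHA-256 to catch byte-identical copies. The executive name, handles, bios, function names, and the 0.7 similarity threshold are all hypothetical. Exact hashing will miss a photo that has been resized or re-encoded; catching those copies would require a perceptual hash instead.

```python
import hashlib
import re
from collections import defaultdict
from difflib import SequenceMatcher


def normalize_name(text: str) -> str:
    """Lowercase and keep letters only, e.g. 'Pat_Exemplar_4471' -> 'patexemplar'."""
    return re.sub(r"[^a-z]", "", text.lower())


def flag_lookalike_handles(handles: list[str], exec_name: str,
                           official: set[str], threshold: float = 0.7) -> list[str]:
    """Return non-official handles whose letters closely match the executive's name."""
    target = normalize_name(exec_name)
    return [
        h for h in handles
        if h not in official
        and SequenceMatcher(None, normalize_name(h), target).ratio() >= threshold
    ]


def shared_value_groups(values: dict[str, str]) -> list[list[str]]:
    """Group handles that share the same normalized value (a bio or a photo hash)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for handle, value in values.items():
        groups[" ".join(value.lower().split())].append(handle)
    return sorted(sorted(g) for g in groups.values() if len(g) > 1)


def photo_fingerprint(image_bytes: bytes) -> str:
    """SHA-256 of the raw file: matches exact copies only, not edited ones."""
    return hashlib.sha256(image_bytes).hexdigest()


if __name__ == "__main__":
    # Hypothetical executive and accounts, for illustration only.
    handles = ["patexemplar", "pat_exemplar_4471", "patexemplarr22", "gardening_tips"]
    print(flag_lookalike_handles(handles, "Pat Exemplar", official={"patexemplar"}))

    bios = {
        "pat_exemplar_4471": "CEO | Investor | DM me for opportunities",
        "patexemplarr22": "ceo |  investor | DM me for opportunities",
        "gardening_tips": "I grow tomatoes",
    }
    print(shared_value_groups(bios))
```

Running the demo prints `['pat_exemplar_4471', 'patexemplarr22']` and then `[['pat_exemplar_4471', 'patexemplarr22']]`: two look-alike handles that also share a canned bio. A high similarity score is only a lead; the final judgment still belongs to an analyst.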

Other identifiers that security teams should look for:

- As a general rule, posts and conversations will lack the emotion or empathy that typically characterizes human speech.

- English that is too perfect

- Repetitiveness, such as giving the same response to different questions. This behavior is generally more indicative of a bot, as AI is getting better at avoiding repetition.

- Inability to recognize or use common human idioms such as slang, shortform, or buzzwords

- Plagiarism
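The repetitiveness check lends itself to a simple measurement. As a minimal sketch, again hypothetical and built on Python’s standard `difflib`, the function below scores what fraction of an account’s replies are near-identical to one another. The `repetition_score` name, the sample replies, and the 0.9 similarity threshold are assumptions to tune; consistent with the note above, a high score points more toward a simple bot than toward a sophisticated AI model.

```python
from difflib import SequenceMatcher
from itertools import combinations


def repetition_score(replies: list[str], threshold: float = 0.9) -> float:
    """Fraction of reply pairs that are near-identical (0.0 = none, 1.0 = all)."""
    # Normalize case and whitespace so trivial variations still count as repeats.
    cleaned = [" ".join(r.lower().split()) for r in replies]
    pairs = list(combinations(cleaned, 2))
    if not pairs:
        return 0.0
    similar = sum(
        1 for a, b in pairs if SequenceMatcher(None, a, b).ratio() >= threshold
    )
    return similar / len(pairs)


if __name__ == "__main__":
    # Hypothetical replies collected from a suspected fake executive account.
    replies = [
        "Thanks! DM me to claim your reward.",
        "thanks!  DM me to claim your reward.",
        "Thanks! DM me to claim your reward.",
        "Our Q3 results call is on Thursday.",
    ]
    print(repetition_score(replies))  # 3 of 6 pairs match -> 0.5
```

Comparing every pair is quadratic in the number of replies, which is acceptable for the handful of comments on a single suspicious post.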

Images/videos

- Fuzziness or blurring around people

- Anomalies within the image

- Identifiers within the metadata, such as location and creation date/time

- Metadata that may show whether the image was edited by a program

- Visual patterns that repeat artistic styles, making images look too perfect

- Objects that are too small or too large relative to the people in the image
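Metadata checks can be partly scripted. In PNG images, textual metadata is stored in `tEXt` chunks and compressed `zTXt` chunks, and the PNG specification predefines keywords such as `Software` and `Creation Time`. The standard-library Python sketch below lists those keyword/value pairs so an analyst can spot an editing program or an unexpected creation date. It is a minimal illustration under stated assumptions: it ignores `iTXt` chunks and JPEG EXIF data, does not verify CRCs, and the function names, sample file, and `ExampleEditor` value are made up. Because metadata is easily stripped or forged, an empty result proves nothing on its own.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_text_metadata(data: bytes) -> dict[str, str]:
    """Return keyword/value pairs from a PNG's tEXt and zTXt chunks."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    found: dict[str, str] = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if ctype in (b"tEXt", b"zTXt"):
            key, _, value = body.partition(b"\x00")
            if ctype == b"zTXt":
                # First byte after the keyword is the compression method (0 = zlib).
                value = zlib.decompress(value[1:])
            found[key.decode("latin-1")] = value.decode("latin-1")
        elif ctype == b"IEND":
            break
        pos += 12 + length  # skip length, type, data, and CRC
    return found


def make_chunk(ctype: bytes, body: bytes) -> bytes:
    """Build one PNG chunk with a valid CRC (used here only to create test input)."""
    crc = zlib.crc32(ctype + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + ctype + body + struct.pack(">I", crc)


if __name__ == "__main__":
    # Synthetic, made-up PNG byte stream containing only metadata chunks.
    sample = (PNG_SIGNATURE
              + make_chunk(b"tEXt", b"Software\x00ExampleEditor 2.1")
              + make_chunk(b"zTXt", b"Comment\x00\x00" + zlib.compress(b"retouched"))
              + make_chunk(b"IEND", b""))
    for key, value in png_text_metadata(sample).items():
        print(f"{key}: {value}")
```

The demo prints `Software: ExampleEditor 2.1` and `Comment: retouched`. For JPEG files, an EXIF-aware library would be the more practical choice.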

Threat Creation

Threat actors can abuse legitimate AI tools to launch online threats just as easily as content creators can apply them to benign social media campaigns. There are many tools leveraging artificial intelligence that automate the generation of creatives and tasks on platforms such as Meta, LinkedIn, X, and more. These tools can connect social channels, turn content ideas into multiple posts, generate video and text, schedule campaigns, and more.

Examples of legitimate software that may be abused for malicious purposes:

- FeedHive

- Vista Social

- Buffer

- Flick

- Publer

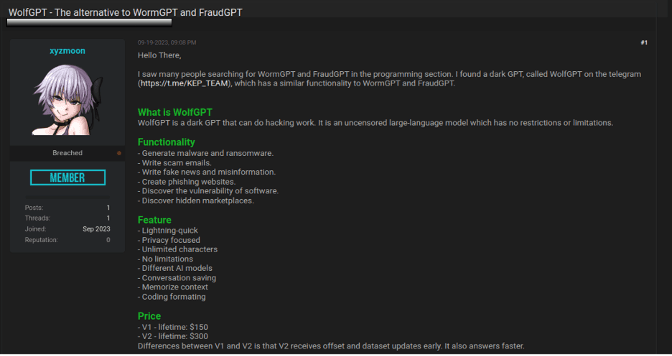

AI-powered chatbots are widely promoted across dark web forums as uncensored large language models capable of bypassing the safeguards found in mainstream services like ChatGPT. These unrestricted tools are offered through monthly, annual, or lifetime subscriptions and sometimes even distributed for free. Threat actors use them to support a range of malicious activities, including:

- Generating malware

- Creating ransomware

- Writing text for phishing emails

- Creating phishing pages

- Detecting vulnerabilities

According to Nick Oram, Operations Manager for Fortra’s Dark Web & Mobile App Monitoring services, the claims made by these tools cannot be confirmed, nor can we determine with 100% certainty their effectiveness in creating working malicious tools or services.

“However, it is important for cybersecurity research teams to be cognizant of the threats posed by AI chatbots and the actors exploiting these tools for fraudulent endeavors,” adds Oram. “These tools will only continue to become more sophisticated.”

Following is an example of an AI chatbot advertised on a popular dark web forum.

Today’s consumers expect brands to show up and engage across major platforms like TikTok, Facebook, Instagram, X, and YouTube. That includes both official brand accounts and executive profiles. Cybercriminals know this and actively target these channels for impersonation and deceptive interactions. The consequences range from consumer confusion to financial loss and long-term damage to brand reputation.